I never got to see this press release before it was posted, so it was nice to read it for the first time. The guys over at IRIDAS have been so supportive; we hope to continue this type of innovation through partnership.

Read the release here at Studio Daily

Thursday, December 06, 2007

IRIDAS and CineForm Announce Full RAW and RGB Post Solution

Wednesday, November 28, 2007

New Concept Drawing for the CineForm HDMI DDR

A big thank you goes to Nathan Ottley who saw our poor concept drawing and re-did it with huge improvements. He even gave it a model number.

Now to get this thing built and on the market.

Tuesday, November 13, 2007

CineForm on a Chip

Embedding CineForm compression algorithms in hardware may seem like an odd direction to go for a software company, particularly as we pride ourselves on offering very high performance software-only compression. Plus, software compression is getting easier, too, as CPUs continue their upward performance trend. When we first started HD capture in 2003, the quality modes we offered were lower than we recommend today (used on Dust to Glory, so still very nice), and were designed for the fastest dual Opteron machines available at that time. Because the performance of Intel CPUs has risen rapidly in the last four years, we've allowed the visual quality of our compression algorithms to climb further as we take advantage of increasing compute power. Today, we offer greater quality on modern home dual-core PCs or fast laptops than we did four years ago on the fastest workstations money could buy.

Yet while Intel is delivering plenty of performance, our compression is still excluded from the embedded battery-operated market, such as camera-attached DDRs (see one DDR concept) or CineForm compression in RAW and HD cameras. (I feel we would be inside Red One if we had CineForm RAW in hardware back then.) While the Silicon Imaging camera (SI-2K) uses CineForm RAW software compression, much of the battery life is used to support a high-end mobile/embedded PC. Smaller cameras and smaller/lightweight DDRs need compression in silicon, so that is what CineForm is now pursuing.

For those thinking, "Why do I need CineForm hardware when I can get hardware compression for MPEG2, AVCHD, MJPEG and JPEG2000?", the answer is: for the same reason you use CineForm today. None of those formats are appropriate for editing and post production; all were designed with different purposes in mind, and all have some of the following issues: 8-bit only, slow decompression, long GOP structure, multi-generation compression loss, color sub-sampling, etc. CineForm was designed for post-production, so a CineForm compressed DDR or camera would suit the indie filmmaker far more than a FireStore or other camera-based compression. CineForm has become a very high-quality native acquisition format, making the issue of transcoding moot.

Thursday, September 27, 2007

Visually Lossless and how to back it up.

CineForm is the company most guilty of using the term Visually Lossless (VL) -- we may even have coined it. Red has benefited from CineForm's success with wavelets, and they also use the term, as they should. But it is a marketing term, so we all should do our best to back it up. While visual inspection of 1:1 pixel data (or magnified) at 12" viewing distances is a good way to test for VL, there is a different way to measure what amount of compression is significant, without involving human inspection. There is a point in most high bit-rate image compression technologies when the amount of loss is insignificant; this occurs when the compression error falls well below the inherent noise floor of the imaging device.

Back in March '07, Scott Billups, George Palmer and Mark Chiolis helped us out with a Viper test shoot at Plus-8 to compare HDCAM-SR to CineForm 444 -- thanks again guys. Those following this blog will remember many posts on the subject. Using a Billups Chart in front of the Viper in Filmstream mode, the stationary image noise power was measured in PSNR at 38.97dB -- this is the amount of difference between adjacent frames due to sensor noise or other environmentals, from an otherwise completely static image. Yet the difference between any uncompressed frame and the HDCAM-SR equivalent was 49.8dB, more than 10dB less distortion (massive) than the inherent noise level. CineForm 444 was 51.03dB, better still (the higher number means less distortion). While adjacent frame noise is not normally measured as a distortion, it is a very good indicator of the amount of change you must stay below for the image to look the same after compression -- assuming you are also trying to store the noise, which I feel is an important part of being visually lossless. In film scans, the film grain is the noise floor you need to stay below, and the compression should preserve the shape of the grain to reach VL.

Taking this test further, I get a little wacky, but please bear with me. I averaged 72 frames of the uncompressed stationary image to extract out the sensor noise -- the 72 gives an effective 37dB of noise suppression. This new average frame is the ideal frame; it is what the camera was trying to see if noise could be eliminated in the camera. When I compare each unique uncompressed frame to the ideal, we can now measure the uncompressed acquisition distortion, just as if it were a lossy format. The PSNR error between the uncompressed signal and the ideal frame was 41.86dB. When you compare the same ideal frame to the compressed results, you get the values of 41.38dB and 41.63dB for HDCAM-SR and CineForm respectively (CineForm 444 was compressing to around 5:1 using Filmscan1 mode and HDCAM SR compressed to around 4:1). The less than 0.5dB difference between the lightly compressed solutions and the uncompressed capture is why CineForm and Sony SR can safely claim to be visually lossless.
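For readers who want to try this kind of measurement themselves, here is a minimal sketch of the two steps described above: a PSNR comparison between frames, and an "ideal" frame built by averaging. It is not the tool used for the Viper test. The scene, the noise level, the 10-bit peak value and the function names are all assumptions made up for illustration, and frames are plain Python lists rather than real DPX data.

```python
import math
import random

def psnr(a, b, peak=1023.0):
    """PSNR in dB between two equal-length lists of pixel values.
    peak=1023 assumes 10-bit data (an assumption for this sketch)."""
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak * peak / mse)

def average_frames(frames):
    """Per-pixel mean of a list of frames: the 'ideal' frame."""
    n = len(frames)
    return [sum(px) / n for px in zip(*frames)]

# Synthetic stand-in for a static chart shot: one fixed scene plus
# independent Gaussian "sensor noise" on each of 72 frames.
random.seed(1)
scene = [random.uniform(64, 940) for _ in range(2000)]
frames = [[p + random.gauss(0, 4.0) for p in scene] for _ in range(72)]

ideal = average_frames(frames)
adjacent_db = psnr(frames[0], frames[1])   # frame-to-frame noise
single_db = psnr(frames[0], ideal)         # one frame vs. the ideal

print(f"adjacent frames: {adjacent_db:.2f} dB")
print(f"frame vs ideal:  {single_db:.2f} dB")
```

With independent noise, the frame-versus-ideal figure should land roughly 3dB above the adjacent-frame figure, because the difference between two noisy frames carries the noise of both. That is in line with the gap between the 38.97dB and 41.86dB measurements quoted above.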

Ideal image:

Uncompressed image:

HDCAM-SR image:

CineForm image:

Download the full screen DPX versions here.

Notes

1: Look at the black background to see how much noise has been removed through averaging sequential frames.

2: The reduction in noise in the ideal image can make it look softer, as noise is often seen as a visual cue for sharpness -- add some noise to it and it will seem sharper again.

3: There is some edge enhancement that must have occurred in camera, as all the images show it, so it is not a compression artifact.

Update: 10/1/07, Here is a good write up on the Viper's edge enhancement that I commented on in note 3.

Friday, September 21, 2007

10-bit log vs 12-bit linear

I have written on the subject of linear vs. log encoding before, but the issue keeps coming up as more users attempt to compare REDCODE to CineForm RAW. While there is plenty of mythology around most of what RED does, the smoke around REDCODE is clearing, so comparisons will be, and are being, made between the only two wavelet based RAW compressors in existence. While I would love to do a head-to-head test starting with the same uncompressed RAW frames, that will be difficult until the Red RAWPORT is ready. So this article does not attempt to do a quality comparison between REDCODE and CineForm RAW; instead I hope to reveal the impacts of 10-bit log (used by CineForm RAW) vs. 12-bit linear (publicly disclosed as used by REDCODE) as they relate to lossy* compression.

* Lossy - sounds bad doesn't it? But even visually lossless compression is not mathematically identical to the original. To reliably achieve image compression above about 2:1, a lossy compression is required.

In the cases of both CineForm RAW and REDCODE compression, the decision to apply a log or linear curve is a design choice, not a software/hardware limitation. CineForm compression delivers up to 12-bit precision (see info on CineForm 444), so 12-bit linear compression with CineForm RAW is certainly an option. Similarly, REDCODE could have chosen to compress 10-bit log; both algorithms could compress 12-bit log with suitable source data. So while there are marketing advantages to the number 12 over 10, and similarly some marketing advantage to Log over Linear (too often incorrectly associated with "video"), there are real world quality impacts that we want to explore in more detail.

* For those wanting a refresher on Log vs. Linear vs. Video Gamma, please see this ProLost blog entry by Stu Maschwitz.

There is an assumption in the above paragraph that compression introduces less distortion when applied to the output curve. We see this every time we switch on the television or download a video; the compression is applied to the final color and gamma corrected sequence. An alternative approach might be to encode with one curve, and have the decoder output to another, yet we don't see this much, certainly never in distribution formats. All this gets into the hairy subject of human visual modeling and the suppression of noise in the shadow regions of the image. The reason common curves like 2.2 gamma are applied for distribution derives from the classic book Video Demystified by Keith Jack :

...[has the] advantage in combatting noise, as the eye is approximately equally sensitive to equally relative intensity changes.

There is a lot in that short sentence. Starting with the second part about the eye's sensitivity, light that changes intensity from 2 to 4 (this could be a number of candles or photons per unit time) is perceived the same as brightness increasing from 50 to 100 - each is perceived as getting approximately twice as bright. Now think of the analog broadcast days, where the channel is very sensitive to noise. Noise is an additive function, so noise of + or - 1 in this example could result in reception of 1 to 5 and 49 to 101. The noise in the brighter image will not be seen, yet the darker values/image are significantly distorted. Gamma-encoding the source (2 to 4) would produce something like 28 to 38, and 50 to 100 would be transmitted as 122 to 167 (using 2.2 gamma). So even with the same noise added to the gamma-corrected value, the final displayed value (the display device reverses the gamma) would be 1.8 to 4.1 and 49.5 to 100.5. These resulting numbers greatly improve the darker regions of the image without compromising the highlights.
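The noise argument can be played with in a few lines of code. This is only a sketch: it assumes values on a 0 to 255 scale (the range is not stated above, but it is consistent with 2 encoding to about 28), the function names are made up for illustration, and it keeps fractional code values, so its figures will differ slightly from the rounded ones quoted above.

```python
GAMMA = 2.2
PEAK = 255.0  # assumed scale for this sketch

def gamma_encode(linear):
    """Map a linear light value to a gamma-encoded code value."""
    return PEAK * (linear / PEAK) ** (1.0 / GAMMA)

def gamma_decode(code):
    """Reverse the gamma, as the display device would."""
    return PEAK * (max(code, 0.0) / PEAK) ** GAMMA

def received_range(linear, noise=1.0, use_gamma=True):
    """Displayed (low, high) values after +/- noise is added in the channel."""
    if not use_gamma:
        return linear - noise, linear + noise
    code = gamma_encode(linear)
    return gamma_decode(code - noise), gamma_decode(code + noise)

def worst_relative_error(linear, noise=1.0, use_gamma=True):
    """Largest displayed error as a fraction of the original value."""
    low, high = received_range(linear, noise, use_gamma)
    return max(abs(low - linear), abs(high - linear)) / linear

for value in (2, 4, 50, 100):
    print(value,
          round(worst_relative_error(value, use_gamma=False), 3),
          round(worst_relative_error(value, use_gamma=True), 3))
```

The first column of errors is the linear channel, the second the gamma-encoded one. The dark values should show a much smaller relative error with gamma applied, at the cost of a slightly larger (but still tiny) relative error in the bright values.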

So what does all this have to do with digital image compression? The introduction of compression is equivalent to the additive noise effect I just discussed. While compression artifacts are not as random as in the analog world, they are additive in the same way, so the impact to shadow regions of the image is the same. Some might think you can design compression technology that compresses the shadows less - sounds like a great idea - yet that already exists: adding a curve to pre-emphasize the shadows does exactly this. Let's look at why compression noise is additive; if you don't care about that, skip to the next paragraph.

Examining why compression distortion is additive, just like analog noise, requires a base understanding of image compression. Visual compression technologies like DCT and Wavelets divide the pixel data by frequency, where low frequency data is more important to the eye than high frequency data, which is exploited to reduce the amount of data transmitted. The simplest compression example is when we transmit the average values (low frequency = (v1+v2)/2) of adjacent pixels, at full precision, and also transmit the difference of the adjacent pixels (high frequency = (v2-v1)/2) with less precision (compression through quantization). That is the basis of DCT and Wavelets; differences between the two arise in how the low and high frequency values are calculated. Let's reconsider our original brightness pairs 2,4 and 50,100; imagine they are adjacent pixel values. Low pass data is (2+4)/2 = 3, and (50+100)/2 = 75; the high pass data is (4-2)/2 = 1, and (100-50)/2 = 25. If we transmit the data without quantization (no lossy compression), the original image can be perfectly reconstructed as 3-1=2, 3+1=4 and 75-25=50, 75+25=100. Now to model compression let's quantize the high frequency components by 2. With decimal points rounded off for compression we get (4-2)/(2*2) = 0, and (100-50)/(2*2) = 12; now the reconstructed image is 3,3 and 51,99 (e.g. 75-12*2 = 51, 75+12*2 = 99). All the shadow detail has been lost, yet the highlights are visually lossless. Distortion due to quantization is +/-1 in the shadows and also +/-1 in the highlights - quantization impacts dark and light regions equally, just as in analog noise. Doing the same compression with curved data (28,38 and 122,167) yields reconstructed values of 29,37 and 122,166, which are then displayed as 2.1,3.6 and 50.4,99.2, leaving significantly more shadow detail. This example shows why compression is typically applied to curved data.
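The two-pixel arithmetic above is easy to reproduce. The sketch below is a toy model of the average/difference step only, not the CineForm codec or any real wavelet; the function names, the truncating quantizer and the 0 to 255 display scale are assumptions chosen to match the worked numbers.

```python
GAMMA = 2.2
PEAK = 255.0  # assumed scale, matching the curved values 28,38 and 122,167

def encode_pair(v1, v2, q=1):
    """Split two adjacent pixels into a full-precision average (low)
    and a half-difference (high) quantized by q, fraction dropped."""
    low = (v1 + v2) / 2
    high = int((v2 - v1) / (2 * q))
    return low, high

def decode_pair(low, high, q=1):
    """Rebuild the two pixels from the average and quantized difference."""
    delta = high * q
    return low - delta, low + delta

def display(code):
    """Reverse a 2.2 gamma curve to get back to linear light."""
    return PEAK * (code / PEAK) ** GAMMA

# Linear data, quantized by 2: shadows collapse, highlights barely move.
print(decode_pair(*encode_pair(2, 4, q=2), q=2))
print(decode_pair(*encode_pair(50, 100, q=2), q=2))

# Curved data, same quantizer: the shadow pair keeps its contrast.
shadows = decode_pair(*encode_pair(28, 38, q=2), q=2)
print(shadows, [round(display(v), 1) for v in shadows])
```

Note that the curved highlight pair 122,167 decodes to 122.5,166.5 in this sketch because the average is kept as a fraction; the text above drops the fraction.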

If linear is so poor with shadows, wouldn't the optimum curve have each doubling of light (each stop) be represented with the same amount of precision? This seems completely reasonable. Instead of storing linear light, which uses the values 2048 to 4095 to represent the last stop of light, why not divide the available values amongst the number of stops the camera can shoot? Let's say (for simple math) your camera has around 10 stops of latitude; that would place around 400 levels per stop in 12-bit, or 100 levels per stop in 10-bit. Now in your creative post-production color grading, it doesn't matter whether you use the top five stops or the bottom five to create a contrasty image - the quality should be the same. It turns out we don't have an ideal world, so while compressing the top stop of 2048-4095 down to around 900-1000 (10-bit) is fine, we find that expanding the 10th stop values of 0 to 8 up to 0 to 100, while preserving all the shadow detail, also preserves (beautifully) all the details of sensor noise, which is always present, and which is difficult to compress. It may also be obvious that expanding the "8" value to "100" doesn't gain you 100 discrete levels in the last stop -- for that you would need the currently impossible 16-bit sensor with about 90dB SNR. So while these new cameras claim 11 stops, don't go digging too far into the shadows.

* NOTE: 400 levels per stop using 12-bit precision sounds 4 times better than using 10-bit. However, once you compress the image the difference mostly goes away. To achieve the same data rate the 12-bit encoder has to quantize its data 4 times as much. The 12-bit compression only really starts to pay off with very little compression, say between 5:1 and 2:1.
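To see how unevenly linear coding spends its code values, here is a small sketch that counts the levels available to each stop. The function names are made up for illustration, and the 10-stop camera is the same simplifying assumption used above.

```python
def linear_levels_per_stop(bits, stops):
    """Code values available to each stop in a linear encoding,
    brightest stop first (each stop is half the range above it)."""
    top = 2 ** bits
    levels = []
    for _ in range(stops):
        levels.append(top - top // 2)
        top //= 2
    return levels

def even_levels_per_stop(bits, stops):
    """Code values per stop if the range were shared equally."""
    return (2 ** bits) / stops

print(linear_levels_per_stop(12, 10))   # brightest stop first
print(even_levels_per_stop(12, 10))     # the "around 400" figure
print(even_levels_per_stop(10, 10))     # the "around 100" figure
```

In 12-bit linear, the brightest stop gets 2048 values while the tenth stop down gets only a handful, which is the imbalance a curve is meant to redistribute.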

The curve that is applied is a compromise between compression noise immunity and coupling too much sensor noise into the output signal. As a result there is no standard curve for digital acquisition, as the individual sensor characteristics and bitrate of the acquisition compression all play a part when designing a curve. This goes for the Thomson Viper Filmstream curve recording to an HDCAM-SR deck and also for an SI-2K recording to CineForm RAW -- the curves are different. But as long as the curve is known, it is reversible, allowing linear reconstruction as/when needed. So the curve is just a pre-filter for optimum quality compression.

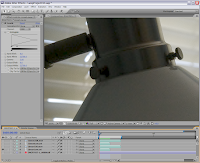

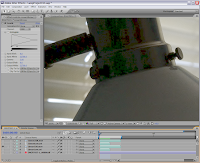

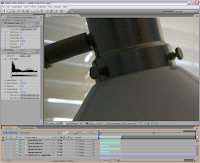

Now for some real world images. To clearly demonstrate this issue I started with an uncompressed image that I shot RAW with my 6MPixels Pentax *IST-D. Using Photoshop I produced a 16-bit linear TIFF source for After Effects. Here is the source image displayed as linear without any gamma correction.

Here the source is corrected with a 2.2 gamma for the display, looking very much like the shooting environment.

To help showcase the shadow distortion, I zoomed in on a dark region that has some worthy detail. I then applied a little "creative" addition by increasing the gamma to 3.0 (from 2.2) to enhance the shadow detail.

Below is the same image encoded using linear to 4:4:4 using the worst quality settings for CineForm and JPEG2000. (I used the worst (highest compression) setting to help enhance compression artifacts -- as bitrates increase the artifacts diminish). I would have preferred to only use JPEG2000 (I don't like running CineForm at this low quality), however the AE implementation of J2K via QuickTime is only 8-bit so it introduces banding as well as linear compression issues. You can see there is a different look to JPEG2000 and CineForm when heavily compressed, yet they both show problems with the shadows with a linear source. With CineForm set to Low and JPEG2000 set to 0 (on the quality level) the output compression ranged between 23:1 and 25:1. The images have their linear output corrected to a gamma of 3.0. Click any image to see them at 1:1 scale.

While there are plenty of compression artifacts in the dark chrome of the lamp, the white of the lamp shade shows the noise becoming very blotchy as the natural detail/texture carried in the noise is lost.

The images below have a Cineon log curve applied before compression. The Cineon curve, while good for film, is not well optimized for digital sources. It sets the black level to 95 and the white to 685, giving you around 9-bits of curve to cover the 12-bit linear source. Yet even still, the results show the benefit of the log encoding. The images below have their Cineon curve reversed and the Levels filter 3.0 gamma applied.

So designing a log curve that is optimized for the camera's sensor and its compression processing will generate superior shadow quality through compression processing than will linear compression, but without visible impact to the highlights. While the quality on the latter images is superior, it is worth noting that the bitrate didn't change more than a couple of percent up or down. Although it's a different topic, this also demonstrates that compression data rate (only) is not a good indicator of image quality.

So I don't end the pictorial by only showing heavy compression, here are a couple of log encoded screen shots at 8.8:1 and 5.5:1 compressed; notice at these higher data rates the Cineon log coding looks identical to the 16-bit TIFF source. The bottom line is that properly designed log curves optimized for the camera will provide better resulting images than coding linear data.

The case for Linear:

While I have done my best to make the case for log or gamma encoding before compression, are there any advantages to linear coding in general? Firstly, uncompressed 12-bit does contain more tonal information in the highlights without sacrificing shadows. You would likely store the data as a linear 16-bit TIFF sequence, which will provide very large amounts of data to deal with -- this 6MPixel example at 24fps would be 829 MB/s (for 4K images you'll generate 1+GB/s.) If your workflow includes uncompressed 10-bit log DPX files, your data rate is still high at 552 MB/s (768MB/s for 4K) so it might seem the difference is small enough to stick with the 12-bit. While mathematically you have more data you would likely never see the difference.
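The data rates above can be reproduced with simple arithmetic. This sketch rests on several assumptions that are not stated in the post: a 3008x2008 frame for the 6MPixel camera, 4096x2048 for 4K, 6 bytes per pixel for 16-bit RGB TIFF, 4 bytes per pixel for 10-bit RGB DPX (three channels packed into one 32-bit word), and "MB" meaning 2^20 bytes. The names are made up for illustration.

```python
MIB = 1024 * 1024  # assumed meaning of "MB" in the figures above

def data_rate_mb_per_s(width, height, bytes_per_pixel, fps=24):
    """Uncompressed data rate for a frame sequence, in MB (2**20 bytes) per second."""
    return width * height * bytes_per_pixel * fps / MIB

STILL = (3008, 2008)    # assumed frame size for the 6MPixel example
FOUR_K = (4096, 2048)   # assumed 4K frame size

TIFF16_RGB = 6  # three 16-bit channels
DPX10_RGB = 4   # three 10-bit channels packed into 32 bits

for name, size in (("6MPixel", STILL), ("4K", FOUR_K)):
    print(name,
          "16-bit TIFF:", round(data_rate_mb_per_s(*size, TIFF16_RGB), 1),
          "10-bit DPX:", round(data_rate_mb_per_s(*size, DPX10_RGB), 1))
```

Under these assumptions the results come out very close to the 829, 552 and 768 MB/s figures quoted above, and the 4K 16-bit case lands a little over 1GB/s.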

Just like uncompressed, compressed linear data has more tonal detail in the last couple of stops -- so an overexposed image may do well through compression. Linear encoding can be considered as a curve that emphasizes the highlights as far as the human eye is concerned, so there may be some shooting conditions where that emphasis is beneficial. Yet overexposing your digital camera's image leads to unwanted clipping; generally for digital acquisition an under-exposure is preferred.

Linear coding also looks great in mathematical models that measure compression distortion. Algorithms like PSNR and even SSIM interpret curves as producing unwanted distortion (when referencing the linear image), even if the results actually look better with a curve applied. This is one reason I'm careful when using only these measurements when tweaking CineForm codec quality as you are in danger of making an image look better to a computer but not to the final viewer.

Linear compression eats shadow noise - that may be perceived as a good thing. Many have discussed in the Red online forums that wavelets can be used as noise reduction filters - that is true. Unfortunately, it is not possible to completely separate noise from detail; some detail will be lost through noise filtering. Noise filtering can help with compression, giving the compressor less to do as a means to reduce the data rate. While noise can be added back, lost information cannot. If you can encode the image including the shadow noise, you provide the most flexibility in post as noise can always be filtered later.

Finally, linear coding is a little easier when performing operations like white balance and color matrix, as these operations typically occur in the camera before the curve is applied, and before the compression stage, in all traditional cameras: DV, HDV, DVCPRO-HD, HDCAM, etc. All these camera technologies use curves so that compression to 8-bit still provides good results. In the new RAW cameras, white balance and color matrix operations are delayed into the post production environment, which is one of the key reasons RAW acquisition is so compelling -- improved flexibility through a wider image latitude. If you apply a curve to aid compression you have to remove that curve before you can correctly do white balancing, saturation and linear operations in compositing tools. Now if the curve is customized for the camera, like Viper Filmstream or SI-2K's log curve, downstream tools may not know how to reverse the curve to allow linear processing. This can be a real workflow concern, so vendors like Thomson provide the curves for Viper Filmstream, and with the SI-2K using CineForm RAW the curve management is handled by the decoder, presenting linear upon request.

Note: For those who want to know the curve used by the SI-2K, it is defined by output = Log base 90 (input*89+1).
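As a sketch of how that curve and its inverse could look in code: the forward formula is the one given in the note, while the 0 to 1 normalization of input and output, the inverse written out here, and the function names are assumptions for illustration, not the CineForm decoder's actual code.

```python
import math

BASE = 90.0

def si2k_encode(linear):
    """output = log base 90 of (input * 89 + 1).
    Assumes linear input normalized to 0..1, which maps to 0..1 out."""
    return math.log(linear * (BASE - 1.0) + 1.0, BASE)

def si2k_decode(coded):
    """Algebraic inverse of the curve: input = (90**output - 1) / 89."""
    return (BASE ** coded - 1.0) / (BASE - 1.0)

for linear in (0.0, 0.01, 0.18, 0.5, 1.0):
    coded = si2k_encode(linear)
    print(f"{linear:.2f} -> {coded:.4f} -> {si2k_decode(coded):.4f}")
```

Because the curve is a known closed-form function, reversing it is exact apart from floating-point rounding, which is what makes linear reconstruction on request possible.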

While converting curved pixel data back to linear for these fundamental color operations does add a small amount of compute time, this is all under-the-hood in the CineForm RAW workflow. Our goal is working towards the most optimized workflow without sacrificing quality. We view curves as one of the elements required for good compression. The black box of our compression includes the input and output curves as needed. If you consider this whole black box, CineForm RAW supports linear input and linear output, but without linear compression artifacts.

Wednesday, September 19, 2007

An "Intermediate Codec" -- Is that term valid anymore?

Some of you may have seen our most recent announcement that CineForm compression has been selected for a 300-screen digital theater rollout in India. Please check out the press release on CineForm.com. While we are very excited about participating in this new market, it has put our codec in an unusual position; our software tools now participate in every part of the film production workflow. Acquisition: We are the acquisition format in cameras like Silicon Imaging and soon Weisscam (see Weisscam press release), we are inside digital disk recorders like Wafian, and we are an output format in the upcoming CODEX recorders. Post-Production: We are widely used natively as an online post-production format on the PC and increasingly on Mac, and now Exhibition: the same format used throughout acquisition and post is now beginning to drive digital theater projection at HD, 2K, and hopefully beyond.

CineForm created its compression technology because codecs designed for tape acquisition or fixed-channel distribution were simply not good enough for the visual quality and multi-resolution workflow demands of advanced post-production. We initially became the “intermediate” between the mediums of heavily compressed source formats and heavily compressed distribution formats. But it turns out if you are good at post, you can also be good at some markets for acquisition and distribution. I need to stop saying that codecs designed for acquisition and distribution “suck” for post, as we are now the exception. :) Now, it is still very true that no one compression format is suitable for all markets, so no one will be downloading CineForm for streaming media; H264 is 10 times more efficient for that market. Yet try editing H264 and you will know why CineForm has its market.

The design parameters for CineForm compression have not changed. It is well known in compression circles that of the three design parameters - quality, speed, and size - you can only pick two. CineForm is one of the few to select quality and speed. Acquisition and distribution formats typically choose size first. In the professional acquisition space, size is becoming less important (except you don't want to store 4k uncompressed; even 2k/HD is a burden for many) as hard disk and flash-based recording systems don't limit to 25Mbit/s as DV/HDV tapes have done--file size can now increase to reach your desired quality. Digital theater markets are also very much quality-driven, and less sensitive to size, as today they are already storing between 80 and 250Mb/s for compressed content delivered to the screen. So why did Cinemeta in India select CineForm? CineForm’s delivered quality and speed together result in a systems cost savings. They get CineForm’s acknowledged “quality”, while the “speed” means it can be played back in software on standard PC platforms without HW acceleration that is required for competing solutions.

Tuesday, September 04, 2007

Congratulations to Red

If you haven't heard, Red just shipped their first 25 cameras -- likely the most anticipated camera launch in recent history. During Red's short public existence, there have been many changes in the way people consider their camera purchases, with a far greater choice of resolution, frame-rates, lens mounts, sensor size, form factor and camera designs that actually consider the post-production workflow upon acquisition. Cameras are no longer bound by the existing standards, enabling many new players to enter the market, and while Red isn't the first, it is the most industry-changing to date.

The Red One is yet to approach feature completion, and I haven't gotten to play with a Red camera yet, but the posted images from the first customers are looking nice. Not that we have the ultimate imaging device. I feel the opposite--this is only the start, as there is plenty of room to grow for Red, for the existing players, and for any other startup that wants to give it a go.

Sunday, August 26, 2007

4:4:4:4 and New CineForm Builds

Normally, I don't post here about new software releases, as we do so many with pretty regular one month release cycles, but these new builds have been a while in the works. One issue with being a "middleware" company, as some VCs have called us, is we are a little at the whim of what the big players do. Adobe releases CS3 with lots of under-documented "features" that we now have to work around, new cameras with new formats (seemingly weekly), Sony Vegas revisions (released almost as often as CineForm), Apple FCS major revisions, and, of course, Microsoft Vista (big headache.)

Sometimes, it seems hard to find the time to add features to our products, rather than just patching features of others. Note: sometimes it is to CineForm's advantage that we can patch features of the bigger guys; we are the glue that makes this stuff work. While there are plenty of patches for the above tools, we did have time to put in some cool new stuff that I didn't want to go unnoticed. In the NEO 2K and Prospect 2K lines there is now extensive support for 4:4:4 with Alpha channels (4:4:4:4), at 12-bits per channel -- this is a first for any visually lossless compression product. While adding 4:4:4 a few months back had a clear market with dual link recording and film-out mastering, 4:4:4:4 was created on pure speculation that there is a market for it (given that it is typically the domain of uncompressed). I hope that it finds its way into some interesting applications; if so, please tell me how you use it.

The latest versions of the CineForm tools are available here : http://www.cineform.com/products/Downloads/Downloads.htm

Update : The new NEO HD/2K and Prospect HD/2K builds now include a license to the Mac codec. See the press release for details.

Wednesday, August 08, 2007

Our latest movie shot with the SI-2K

Now, there is always a huge story about what went right and wrong when doing the 48 Hour Film Project, and we had lots of both--maybe I will get into that later. Unfortunately, we were not eligible for competition this year, as we submitted our film two hours late (The "50 hour film project"), along with 17 other late teams of the initial 49 (with two not submitting.) We did get selected as one of the films for the "best of" screening, so it was not a total bust, and of course we (mostly) had fun (and stress) making it.

We shot with two SI-2K minis, one production unit with a P/L mount Super-16 zoom and one older prototype with a C-mount Canon TV zoom -- the $50 lens from last year. There will be a whole post with more technical details soon.

This year we got the genre of Fantasy.

Required elements:

Character : Alex Gomm, County Employee

Prop : A spoon

Line of dialogue : "Keep that thing away from me."

To save to your local drive, as it is too big to stream (350MBytes), right click on the download link for a 720p WMV/HD version of Sir Late-a-Lot or here for a poor quality youtube version.

P.S. If you want to see what we did last year, here are the youtube.com and archive.org HD links to Burnin' Love.

Thursday, July 26, 2007

Canon HV20 - 24p or not?

Yes, it is 24p.

Got that out of the way. It seems that there have been a bunch of forum threads attacking Canon, saying that this awesome little camera doesn't really shoot 24p. Misinformation is not unusual for the internet; however, these posts often quote me or CineForm as backing this position. Neither I nor CineForm supports these posts or claims.

The problem arose when I did state that there can be a subtle issue for chroma keying when using any 4:2:0 24p signal encoded into 60i. Some users took that and ran with it. I had seen some of this in customer footage, nothing I have shot. I probably wouldn't have mentioned anything other than that it is another selling point for using the HDMI output from these new cameras (which is damn cool), and I'm a video geek. Since then I'm not even sure this issue exists outside of MPEG compression artifacts, as some more recent borrowed footage looks great. I have so little HV20 footage to work from that we shot ourselves -- Canon, we need a camera longer than a few days -- I want one to take home :).

Basically, the 24p signal is good, and the CineForm pulldown from 1080i60 HDV tape works perfectly. That is my position.

Here is some 24P extracted footage from a friend's HV20 as she was documenting some behind the scenes footage for our 48 Hour Film Project shoot -- the camera pictured is a Silicon Imaging 2K. We intercut the HV20 footage into the credits of this movie and presented it at 1080p24 on a Sony 4K projector. The linked clip is a 110MB 1440x1080 CineForm AVI, so if you need a CineForm decoder for your PC (Mac version coming), you can download one for free from CineForm.com.

P.S. Other HV20 misinformation : when recording the Canon HV20 to tape, the image is 1440x1080, that is the HDV standard used. It is not 1920x1080, you only get that out of the HDMI port, and even then the image is likely upsized from an internal 1440x1080 image (which is still very nice.) The 1920x1080 native image is only available in the still camera mode.

Wednesday, July 25, 2007

Digital Media Net interview

David Basulto of www.filmmakingcentral.com and now digitalmedianet.com did an interview with me a week or so back. This covers some of the basics of what CineForm is all about and what we are trying to achieve. You can listen to the full interview here: videoediting.digitalmedianet.com/articles/viewarticle.jsp?id=163809. Unfortunately, the Skype recording of the interview mixed the two voices as if we were stepping on the end of each other's sentences (we weren't.)

Friday, July 20, 2007

Mastering Digital Cinema

The crew at CineForm has been very busy over the last four days. As intended, we transcoded and up-converted all the 48 Hour Film submissions for the San Diego division of this international film festival. We had a total of 47 entries (including our own) and nearly 47 different video formats, compression types, frame rates, frame resolutions and aspect ratios. This was a bigger can of worms than I had predicted, as filmmakers/editors in a rush make a lot of mistakes. CineForm, as a major local sponsor, wanted all the films to look their best when output on the Sony 4K projector, yet the conditions of this competition--think Iron Chef for filmmakers--do conspire against our goal of making the pictures really pretty. That said, many of the pictures did look awesome, and basically every film was improved by our up-conversion, resulting in many happy filmmakers. The audiences cheered and clapped each time our tag was shown, but even more enthusiastically when shown the second time at the end of each night's screening (that felt nice.) Thank you Geoff Pepos of Rogue Arts for making our 15-second tag -- I hope to post that for others to see soon.

The project was presented by a portable Wafian DDR, the HR-F1. This makes a very nice playback server now that there is a play-list feature (that feature is still beta.) In each session, there were around 10 films, with 5-6 other reels for 48 Hour promotion materials and other sponsor advertisements. The Wafian box performed flawlessly.

Here is a cross section of the formats we had to deal with :

DV 60i, 24p, 24pA, mixed 24p/24pA/60i, in 4x3, 4x3 letterboxed, 16x9, mixed aspect ratio (some not intentional), wrapped in MOV or AVIs, recoded to H.264 (at the horror of 256mb/s). We had Apple Intermediate codec encodes as 30p (we said no to 30p as this was for a 24p presentation, yet we had 3-4 films submitted that way), we had a couple of ProRes movies, and a range of 720p24 DVCPRO-HD material, some coded at 60p. We had a surprising number of clips mastered at 24.000 and 30.000 fps -- how or why they did this, I have no idea -- the cameras were standard 23.976/29.97 shooters. Very little 1080p at 23.976, our presentation format, four at most (our own film being one of them.) That was picture data; audio was a whole new set of issues. Converting it all was actually a lot of fun.

We learned a lot during this process, and I expect there will be several enhancements made to our own products to ease the conversion for a wide range of source material. We actually produced code over these last few days to deal with pulldown and framing issues we saw.

Some take-aways for filmmakers entering these competitions:

1) Choose a video format before you start shooting and stick with it. And for 24p presentation don't mix 60i and 24p sources.

2) Don't letterbox a 16x9 movie into a 4x3 frame; that just wastes resolution -- I know some cameras force you to do it and this is actually recommended by the 48 Hour Film web site. This causes another issue: if you have 16x9 sources and render to 4x3, any re-frame (zooming into a shot) will make your letterboxing size change between scenes -- there were 2 or 3 films with that issue.

3) Stop the lens down, or use neutral density filters; many movies had large, clipped white regions that could have been avoided to achieve a more filmic look.

4) If you want a really dark look, still light your scene well and make your film dark in post; we saw a lot of source compression artifacts in the shadows of dark films.

5) Basically for items 3-4 there is only a small range of light that works well for most low cost video cameras; try for the camera's sweet spot. That might mean you need to gel your windows for interior shoots.

6) Don't use heavy compression on your output, don't export to MPEG2, MPEG4 etc. for your deliverable -- use that for your YouTube uploads.

7) Shoot HD 24p if you can, as it looks great on the big screen.

This was an awesome experience and I hope we get the opportunity again.

Thursday, July 12, 2007

24p is in most cases 23.98, or 23.976 or 24000/1001 fps.

Sorry for the weird title, but I have just received that question twice in ten minutes from 48 Hour Film Project contestants. In my last post, I said we are presenting in 1080p24, and we would prefer 24p submissions. The problem is 24.000p is likely not available on 98% of the cameras being entered into the competition (the 2% that can shoot 24.000p are the two Silicon Imaging cameras used by our team -- unless some of the Red guys are entering with a prototype--that would be fun.) The rest shoot what is commonly called 23.98, but I prefer 23.976 (it matters sometimes in AE), but it is really 24000 divided by 1001 frames per second or 23.9760239760... So when I said 24p, I really meant 23.976...p -- sorry for the confusion. Even our SI-2K cameras are set to 23.976. 23.976 is the new 24p. Now if you can shoot or edit 24.000, that is fine, too; just never mix 23.976 and 24.0; you get this nasty frame ghosting due to the frame blending in most NLEs. In our more recent software, we pop up a warning when you add 24.0 to a 23.976 timeline (or vice versa.) So if you have a 24.0 camera with a 24.0 timeline, we will re-master it to 23.976 for projection.
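For anyone who wants to check the numbers, here is a minimal Python sketch of the arithmetic. The function name `drift_frames` is made up for this illustration; it only shows how quickly a true 24.000 clip drifts against a 24000/1001 timeline, which is why NLEs end up blending frames when the two are mixed.

```python
from fractions import Fraction

NTSC_FILM = Fraction(24000, 1001)  # the rate usually labelled "23.98" or "23.976"
TRUE_24 = Fraction(24, 1)          # real 24.000 fps


def drift_frames(seconds):
    """Frames by which a 24.000 clip runs ahead of a 23.976 timeline."""
    return float(seconds * (TRUE_24 - NTSC_FILM))


print(float(NTSC_FILM))              # roughly 23.976024
print(round(drift_frames(60), 3))    # roughly 1.439 frames per minute
print(float(1 / (TRUE_24 - NTSC_FILM)))  # seconds until the drift reaches one full frame
```

The drift works out to one whole frame about every 41.7 seconds, so even a short film mixing the two rates will show the mismatch.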

Why this weird number, 23.976 (and its direct cousin 29.97)? It goes back to when someone thought it was a good idea to add color to television. They needed to shift the frame-rate slightly so the new "NTSC" color sub-carrier didn't freak out the existing black and white TVs. Now we are stuck with it, and it still works on your mid-century B/W set (but where is the HDMI connector?)

Monday, July 02, 2007

Mastering your short for theatrical presentation.

While this post is aimed squarely at those doing films in the San Diego division of the 48 hour Film Project (yes, many of my posts will be on this topic for a while), I hope this information is general enough for anyone producing a short/feature for HD presentation.

As CineForm will be re-mastering all 50 films for their theatrical presentation starting two days later, we had to determine the best way to handle the range of input formats without degrading the overall presentation quality. Years of seeing my own HD work crushed to letterboxed SD drove this year's CineForm involvement. While projecting HD as HD and SD as SD is simple enough, we found in our theater tests that the projector format-switching introduces unwanted garbage on the screen as the projector re-syncs -- one of the projectors even required realigning the display after each format switch (although not the Sony 4K.) With common formats ranging from SD 60i, SD 24p and 720p to 1080i60 and 1080p24, we were in for a lot of projector switching. For this reason, we are re-mastering all submissions to 1920x1080 and projecting at 24 frames per second, i.e. more work for CineForm.

As 24p is not perfect, it is a good idea for filmmakers to consider its minor limitations. It is best to avoid excessive camera motion, particularly if you are shooting 60i SD or HDV, which is more forgiving of camera movement. In most situations, cameras on tripods or with slow camera movements typically translate better to the big screen. Also, as the presentation is 16x9 wide-screen, use it if your camera supports this mode. If you are using letterbox on a 4x3 camera, we will scale the active picture to fill the screen. Full screen 4x3 shorts will be kept in their original format; the source aspect ratio will be preserved for all content.

Clearly, 24p sources work the best; however, it is not too difficult to get nice results converting 60i to 24p. The 30p or "frame mode" common on many cameras should be avoided, though, as that doesn't convert to 24p well.

Most of the above advice is pretty straightforward and standard, but then I considered the number of very nice 24p sources (like Sony's V1U and Canon's HV20) that are not supported within popular NLEs (e.g. Apple FCP.) 24p editing on a 60i timeline is far more common than it should be, and just like interlaced video, it should be outlawed. :) The issue is that each 24p clip on a 60i timeline will not have the same "cadence"; with each cut potentially creating sequences with half-frame lengths, making a good 24p master is nearly impossible, and that is not even considering what happens with dissolves. Clearly, if you can edit 24p on a 24p timeline, do it! Fortunately, CineForm customers don't have this issue, but I couldn't recommend that all filmmakers learn new software for this project. So at first I thought we couldn't support bad cadence 24p (in 60i), particularly with a very short time-frame to remaster so many films, but then I saw it as an opportunity to upgrade our internal 60i to 24p algorithms to handle this craziness. That is what I've been working on this weekend, and the San Diego audience will see it in action in two weeks and see that it works pretty well.
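To make the cadence problem concrete, here is a small Python sketch of standard 2:3 pulldown and what a careless cut does to it. This only illustrates the general idea; it is not CineForm's algorithm, and the helper names `pulldown_2_3` and `cadence` are invented for the example.

```python
def pulldown_2_3(frames):
    """Spread 24p frames across 60i fields (2, 3, 2, 3, ...) and pair the fields."""
    fields = []
    for i, frame in enumerate(frames):
        fields.extend([frame] * (2 if i % 2 == 0 else 3))
    # Two consecutive fields make one interlaced frame.
    return [(fields[i], fields[i + 1]) for i in range(0, len(fields) - 1, 2)]


def cadence(interlaced):
    """'P' = both fields from one film frame, 'M' = fields from two different frames."""
    return "".join("P" if top == bottom else "M" for top, bottom in interlaced)


clip1 = pulldown_2_3("ABCD")   # AA BB BC CD DD
clip2 = pulldown_2_3("WXYZ")   # WW XX XY YZ ZZ

# An editor cuts on the 60i timeline without regard to cadence.
edited = clip1[:3] + clip2[1:]

print(cadence(clip1))    # PPMMP
print(cadence(edited))   # PPMPMMP
```

In the edited sequence the repeating PPMMP pattern is broken at the cut, and film frame C survives only as a single field. A pulldown remover that assumes one fixed cadence will produce ghosted or half-length frames there, which is why the cadence has to be re-detected around every edit.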

So, in order of preference :

* shoot HD 24p edit in 24p (render out as 24p or as 24p with pulldown.)

* shoot HD 24p edit in 60i (render out as 60i)

* shoot HD 60i

* shoot DV 24pA edit in 24pA (render as DV 24pA)

* shoot DV 24p edit in 60i (render as DV 60i)

* shoot DV standard 60i edit in 60i

Please avoid 30p/60p (of course these are fine for slow-motion, but speed change them to 24p.)

Have I missed anything? Please ask any questions in the comments section.

Monday, June 25, 2007

CineForm projecting to Sony 4K went very well.

With less than three weeks until the 48 Hour Film Project competition, we carried out tests today with a portable Wafian recorder acting as a digital cinema playback server to the Sony 4K projector. The results were stunning. The Wafian HR-F1 is a very convenient way to do HD presentation, using this tiny form-factor to deliver 1080 24PsF over HDSDI to the Sony 4K projector. We played a range of scanned film and SI-2K source material, all looked great. But as we are effectively renting the theater for these tests, I was able to do things that may not be very acceptable at $10 per movie ticket, like standing 2' from the 34'+ screen looking at the pixel structure of the image -- something I always want to do with digital projection (with film I don't need to see the grain that close.) At 4K, even the first row can't see the pixels -- this is a nice projection format. I'm hoping we have many HD+ submissions for this competition, as we want to help SD projection come to an end ASAP.

Thursday, June 14, 2007

A CineForm first : driving the 48 Hour Film Project to HD and beyond

For each year the 48 Hour Film Project has come to San Diego, CineForm has entered a team; we even won a prize last year (see the full story for 2006.) After each event, we can't help thinking that CineForm needs to sponsor this competition, as these cutting edge indie filmmakers are our target market. But as we lack the funding of past heavyweight sponsors Avid and Panasonic, we had to be inventive.

This year, we are making the San Diego division of the 48 Hour Film Project the coolest of the 60+ international city circuit, by being the first city to present HD by providing services in exchange for CineForm sponsorship. CineForm is providing all the back-end format conversion and mastering of all 40 to 50 films to 1080p HD, all within 2-3 days of the competition's completion. Then CineForm, with the help of Wafian, is providing the projection server and staffing to present all the submissions on a Sony 4K projector at the Hillcrest Landmark theatres. To upconvert 50 short films from a huge range of formats to 1080p for projection is a marathon project in itself, plus we'll be entering our own team two days before, so we'll be flat out for a few days.

Why are we doing this? Because we can. With our tools now being cross platform and CineForm being an ideal project format (yes, we have a digital theatre group adopting CineForm over the more pricey JPEG2000) we have all the tools to pull this off. Plus, it's going to be a lot of fun, particularly to see our work on a 4K screen.

If you are an HD indie filmmaker and you're in or near the San Diego area, you should consider joining this competition to see your work in its full resolution on the big screen. Sign up while you can.

Friday, June 01, 2007

CineForm RAW interview at NAB '07

Digital Production BuZZ has posted an interview between Jim Mathers and myself that occurred at this past NAB. While I never feel completely comfortable when someone points a camera and mike at me and says "Tell us about all this," I think it came out okay. My monologue covers the beginnings of CineForm RAW for digital cinema acquisition to today's integrated 3D look system within the SI-2K cameras. My section is only 9 minutes and it can be found at the 20m:20s mark of the podcast.

Here is the direct link to the mp3 of the interview. Also, check out the complete show notes for the entire podcast.

Tuesday, May 29, 2007

Silicon Imaging demo online

Jason Rodriguez of Silicon Imaging has posted an excellent demo of the user interface controlling the SI-2K digital cinema camera. It shows some of the advantages in using an embedded Intel CPU with a video camera. Click the image to jump to the demo.

Note: when he is talking about compression level, and the display says "Very High," he is actually talking about the quality level. Very high quality and very high compression tend to have opposite meanings. :) At the "Very High" setting, the compression is a light 5:1.

Wednesday, April 25, 2007

CineForm 444 vs HDCAM-SR revisited

During NAB, I had an honored visitor to the CineForm booth, Hugo Gaggioni, CTO of Sony's Broadcast Production System Division. Unfortunately, I was not there to meet him, so he spent time with Jeff Youel of Wafian discussing our recent test with HDCAM-SR, and how we were able to achieve the results we did (the reason for his visit.) I later tried to follow up with Hugo at the Sony booth, but I had no luck with paging him. Pity, as I now had data as to why CineForm did so well against his format.

That same day, I answered the challenge for an outsider (Russell Branch of InnoMedia Systems Ltd.) to evaluate the test materials comparing CineForm to HDCAM-SR. Russell had been following the discussion on the Cinematographer's Mailing List (CML) regarding this experiment, and kindly offered to perform the quality measuring tests himself. It's likely Russell had access to the OmniTek picture quality analysis device, and along with some help from the OmniTek guys we ran a DVD full of frames through their analysis. Their conclusion was very similar to our own, and it also showed the HDCAM-SR doing worse on the StEM, just as we had found. The main difference in this test (other than the OmniTek being much faster than my own analysis), was that the OmniTek device could show us why SR's quality dropped for StEM footage.

Often for testing CineForm I would gain up the difference (between compressed and the source) to show what has changed during the encoding. This photo is the OmniTek showing that very difference, subtracting the CineForm compressed images from the uncompressed source, revealing an effectively grey frame (no significant differences.) However, I never did this test with HDCAM-SR; after all, I can't fix any issues I might find, so the test never occurred to me. The OmniTek operator had no such issues; he didn't know what compression he was testing and was therefore completely unbiased (sorry, I didn't write down his name.) When he flipped on the differencing, there was a distinct image within the frame -- he even suspected it was HDCAM-SR based on the images revealed in the differencing.
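The "gain up the difference" trick is easy to sketch. The Python below is an illustration only, working on a flat list of 10-bit code values; the function name, the gain of 16 and the mid-grey offset of 512 are all assumptions chosen for the example, not settings from any CineForm or analyzer tool.

```python
def gained_difference(source, decoded, gain=16, mid=512, max_code=1023):
    """Return (source - decoded) * gain, centred on mid-grey and clipped to 10 bits."""
    out = []
    for s, d in zip(source, decoded):
        value = mid + (s - d) * gain
        out.append(max(0, min(max_code, value)))
    return out


source = [64, 500, 940, 2]
decoded = [64, 501, 939, 4]   # tiny errors, plus a lifted near-black sample

print(gained_difference(source, decoded))   # [512, 496, 528, 480]
```

A perfect codec gives a flat frame of 512 everywhere. Any structure visible in the gained image shows where the encoder changed the picture, and an error concentrated in the darkest samples shows up as a recognizable image of the shadows.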

The brighter image parts of the display, indicating a larger encoding error, were the black regions of the source image. It turns out the Sony deck will not encode the very lowest values of black. I must quickly note that a standard broadcast signal will not contain these black levels and the Viper footage didn't either, and that is why SR is closer to the CineForm quality for the live footage. This black level issue will only come up for content meant for theatrical presentation--a film-out master, just like the StEM footage, which is designed as a stress test. Now the amount of black truncation is not huge, but enough to explain the 2-3 dB drop in measured quality performance. Can anyone tell me why the whole 0 to 1023 range isn't recorded when the same range is valid over HDSDI/dual link?

While the original tests are valid, if we accounted for HDCAM-SR black levels by limiting the range of the source material, the difference would be less significant. Our original goal for these tests was to prove a software wavelet compressor can be as good as the best and most widely accepted compression solution. This we have achieved. But I will also acknowledge that with the two formats so close in quality, there will be some sequences that will favor SR over CineForm. I leave it up to others to find those sequences for their own comparisons. ;)

update April 30, 2007: I was just informed that it is dual link HDSDI, not HDCAM-SR, that limits the valid range to only 4-1019. As we were only testing a file based workflow with CineForm 444, I re-ran the PSNR analysis using only the 4-1019 data range by clamping the extreme values. Surprisingly, I found the PSNR numbers for SR only moved up a very small amount, far less than the 2-3dB I had predicted. The highly detailed StEM footage does seem to favor CineForm, likely due to our VBR nature versus the SR's CBR design, and the black levels were just a red herring.
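For readers who want to repeat this kind of check, a PSNR measurement with range clamping can be sketched in a few lines of Python. This is not the analysis code used for the test; the helper names are made up, and using 1023 as the peak value for 10-bit data is an assumption.

```python
import math


def clamp(samples, lo=4, hi=1019):
    """Limit 10-bit code values to the range that is valid over dual link HDSDI."""
    return [max(lo, min(hi, s)) for s in samples]


def psnr(reference, test, peak=1023):
    """Peak signal-to-noise ratio in dB between two equal-length sample lists."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


reference = [0, 2, 512, 1023]
recorded = [4, 4, 512, 1019]   # toy data: only the out-of-range extremes differ

print(round(psnr(reference, recorded), 2))   # 50.66
print(psnr(clamp(reference), clamp(recorded)))   # inf
```

In this toy case all of the error sits in the clamped extremes, so clamping removes it completely. In the real footage the score barely moved after clamping, which is what pointed away from black levels as the explanation.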

update May 12, 2007: I have recently learned that the OmniTek operator was Mike Hodson, the company founder and designer of the OmniTek PQA. So the testing was in very good hands.

Thursday, April 19, 2007

NAB Stuff -- CML Party

I'm back from NAB with a lot of exciting work to do -- funny how we are so busy preparing for this show, only to have a brand new list of tasks to complete immediately after it. I skipped the last day, which is typically quieter, to get a head start on my to-do list. The show was exhausting as usual, but all indications are of a very positive experience for CineForm and our booth partners (Wafian & Silicon Imaging.) The important thing was I was there long enough to attend the CML party.

This party is a geekfest of cameras in a shootout, plus beer and pizza. Thanks are well-deserved to Scott Billups and Geoff Boyle for organizing the event. There were cameras from Panavision, Sony, Panasonic, Silicon Imaging, Phantom & Canon (HV20 -- no joke, it was in the shoot-out vs a Phantom 65 and Genesis.) Capture gear was provided by Rave HD, Wafian and Codex. Pictures tell a lot.

Sunday, April 15, 2007

Apple catching on, proves CineForm right.

Several years ago, CineForm attempted to sell Apple on the idea of a digital intermediate codec for professional post. Unfortunately, back then they still had their heads stuck firmly in the DV sand, and believed DVCPRO-HD would be sufficient, which everyone today knows it isn't. With the announcement of Apple ProRes 422, it seems the CineForm message has finally taken some hold, but it seems only as far as offering an equivalent to Avid's DNxHD (good for CineForm.) It seems to be a DCT codec with nearly identical data rates to the Avid codec, 1080p24 is quoted at 22 MB/s or 176Mb/s (same as Avid.) It might be wishful thinking if the Apple codec is a CBR design like Avid's; I guess we will have to wait and see. Funny thing, with all our recent work on a 4:4:4 codec, it's like we knew this was coming. :)

Thursday, April 12, 2007

First new SI-2K and SI-2K Mini images.

Things have changed a lot over at Silicon Imaging; check out the new body and styling of the SI-2K as designed by P+S Technik. See their press releases regarding their partnership with P+S Technik and the integrated Iridas SpeedGrade system.

Even the Mini's styling got an overhaul, but that interchangeable P+S Technik lens mount is sweet. With adaptors you can mount lenses of these types : PL, B4, Nikon F, Canon EF, Panavision PV, C mount, and some I haven't heard of.

Tuesday, April 10, 2007

AVCHD support

Switching gears from proving 4K and 2K are effectively the same, to something totally consumer -- AVCHD. This entry level HD acquisition format has been troubling editors/hobbyists for a while now. You may have thought M2T (HDV) was hard to edit, but try working with AVCHD; the MPEG4 long GOP hardly even plays on most PCs. We knew AVCHD to CineForm file conversion was going to be the best way to edit this stuff; it was just a matter of finding a suitable decoder. After 4+ months of searching and trying to license components from companies that will not return any calls, I just found a new decoder/player that works great with HDLink (included in all our PC tools.)

Now this player / AVC decoder is a little pricey at $50, but to make a $1000+ camera editable, it is an excellent deal. Here is the link : Player + AVC Plugin. (You need both the player and AVC PlugIn.) It even works with the demo version (which adds a small logo) and with our trial version (which doesn't add any logos.) Once installed you can use the current HDLink to create CineForm AVIs with a small trick -- rename the AVCHD files from *.m2ts to *.avchd (or something random.) Otherwise HDLink mistakes the M2TS for a regular M2T file; we will fix this in the next release. In the meantime you can edit your AVCHD footage in real-time without issue.
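If you have a card full of clips, the rename can be scripted. Here is a minimal Python sketch; the function name is made up, the folder path in the usage line is a placeholder, and it skips any file whose new name already exists rather than overwriting it.

```python
from pathlib import Path


def rename_m2ts(folder, new_suffix=".avchd"):
    """Rename every *.m2ts file in `folder` and return the list of new paths."""
    renamed = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() == ".m2ts":
            target = path.with_suffix(new_suffix)
            if not target.exists():   # never clobber an existing file
                path.rename(target)
                renamed.append(target)
    return renamed
```

Calling `rename_m2ts("D:/AVCHD_clips")` (a hypothetical folder) would turn `00001.m2ts` into `00001.avchd` and leave every other file alone.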

Update 07/07/07: All our products now support *.m2ts files; however, you still need a valid AVCHD decoder to do the file conversions. You can test if you have the right decoders installed by trying to play the *.m2ts files in Windows Media Player (when that works the CineForm conversion will work.)

Please also see CineForm's tech. note.

Saturday, April 07, 2007

Myth-busting : 4K vs 2K

I've recently been watching a few episodes of Mythbusters after my father said it was fun, and it is. So I figure that's what I've been trying to do here: bust myths like "compression can't be used in post," or in this post, measure the real difference between 4K and 2K acquisition; I just don't get to blow anything up.

This is not a blind image comparison test like I've done previously; after all, we know 4K is better than 2K -- right? Instead I will investigate how much better 4K is than 2K under normal export scenarios. This is not so much a SI-2K vs Red One comparison; rather it is a practical look at what happens when mixing camera sources or mixing shooting modes from one camera (all these bayer cameras tend to shoot at a lower resolution when overcranked.) Also, for anyone shooting with B4 or 16mm lenses, you are only going to get 2K or maybe just 1080p, so it will be good to know what you are missing out on. For those who question my bias, yes, we have licensed CineForm RAW compression (non-exclusively) to Silicon Imaging for their SI-2K camera; however, CineForm is marketing our solution to all RAW shooters, including Arri D20, Dalsa Origin and Red One users. We hope to benefit no matter what resolution you shoot with.

Let's be real here: today's delivery formats will not have people finishing their productions at 4K (I doubt even 1% of 4K acquisitions will be finished at 4K 4:4:4.) For those getting a filmout, it is typically done at 2K; even digital mastering for D-cinema is mostly 2K, or 1080p for most E-Cinema and HD masters. So the 4K image is primarily going to buy you some flexibility for re-framing; the over-sampling will help clean up noisy sources and ease pulling of keys, certainly a good thing. But assuming you have lit your project well and framed correctly in camera, how much difference is visible between shooting 4K vs 2K?

For this test I have a 4K sequence from a Dalsa Origin (4096x2048); I could do the same from any of the high resolution sources like Red One or Arri D20. To simulate the same sequence shot at 2K RAW took a little work. The original 4K RAW frame was compressed into a CineForm RAW AVI, and this AVI was loaded into After Effects (the CineForm compression stage was needed as After Effects will not load the RAW DPX file from Dalsa, but as the compression is very light, this will not impact anything.) The AVI was placed into a 4K composite, in which I added a blur filter to simulate the effect of an optical low pass filter (OLPF), which would be used on any decent bayer camera. Working out the amount of blur was tricky, more on that later. The 4K After Effects composite with the blur was then exported as a 2K DPX (RGB 4:4:4) sequence. As RAW bayer only has one color sample per pixel, not three like 4:4:4, this DPX sequence was then sub-sampled into a 2K RAW sequence (one third the size.) This new RAW sequence was encoded into a 2K CineForm RAW file, nearly a quarter the size of the original CineForm RAW 4K AVI (only 10MBytes/s.) The next step was to place both the original CineForm RAW 4K sequence and the derived 2K RAW sequence into a 2048x1024 project running Prospect 2K under Adobe Premiere. You will be able to see timeline playback and mixing of 4K and 2K in real-time at our NAB booth (SL7826) on mid-range PCs (any modern dual-core Intel system.) I set the demosaicing quality to the best and then visually compared the look of 4K vs 2K. Oops, something wasn't right.
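The sub-sampling step described above, going from RGB 4:4:4 to a bayer mosaic, can be sketched in Python as follows. The RGGB layout and the function name are assumptions for illustration; real cameras differ in which corner of the 2x2 tile holds red.

```python
def rgb_to_bayer(rgb_rows, pattern=("RG", "GB")):
    """Keep one colour sample per pixel, chosen by a repeating 2x2 bayer tile."""
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel[pattern[y % 2][x % 2]]] for x, pixel in enumerate(row)]
        for y, row in enumerate(rgb_rows)
    ]


# A tiny 2x2 RGB image: each pixel is an (R, G, B) tuple.
rgb = [
    [(10, 20, 30), (11, 21, 31)],
    [(12, 22, 32), (13, 23, 33)],
]

print(rgb_to_bayer(rgb))   # [[10, 21], [22, 33]]
```

Four samples remain out of the original twelve, which is the "one third the size" mentioned above. The discarded samples are what the demosaic has to reconstruct later, and without the OLPF blur that reconstruction produces color fringing.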

The first thing I noticed was there was some color fringing in the 2K image, which you don't see on a real 2K shoot, yet the 4K looked great scaled to 2K within Premiere. It turns out my guess for the blur level to simulate the OLPF was wrong -- too little and you get image aliasing, too much and you get a blurry image. So I went back to the composite and produced a range of sequences with different OLPF strengths, to get an image that wasn't overly blurred or aliased, just like a correctly configured camera.

The results of my test sequence are shown in these frames:

The CineForm RAW 4K exported as 2048x1024 (click on image to see a 1:1 scale)

The CineForm RAW 2K simulation exported as 2048x1024 (click on image to see a 1:1 scale)

There is a mild softening in the 2K RAW source, but the difference is not massive; my guess is about a 10% reduction in something (frequency response maybe, but I wasn't directly measuring this.) However, this 2K image is unsharpened and shows signs of the OLPF, so the next image adds a little sharpening, just like a regular camera (quick and dirty -- I didn't spend much time on this.)

The 2K RAW sharpened export as 2048x1024 is starting to look much more like the 4K downscale. (click on image to see a 1:1 scale)

As expected, 4K still has an edge, but for practical workflows it is not going to greatly impact the results for a typical shoot. There will be no issues in mixing 4K and 2K sequences within your projects; your audience will not be able to tell. This test also shows how well a Bayer "raw" image can maintain the look of a 4:4:4 image at the same resolution. 4K RAW downscaled to 2K gives a very good 4:4:4 source, and 2K RAW does a pretty good job of maintaining the 4:4:4 quality. For those who are still budgeting for 4K masters and 4K projection, make sure your audience sits in the front few rows. :)

Tuesday, April 03, 2007

Iridas working with CineForm

We have been squeezing in some work for Iridas in this tight pre-NAB time frame. We weren't going to find the time for a related press release, but thankfully Iridas had the time.

http://www.iridas.com/press/pr/20070403.html

Monday, April 02, 2007

Mastering to HDCAM-SR vs CineForm 444

HDCAM-SR has found its way into two very important parts of the market: on-set capturing and final mastering. The quality is very high so it is clearly suitable for both. In our previous tests we compared CineForm 444 and HDCAM-SR as an on-set recording format. In this test we compared the performance in mastering. Our source material was 580 frames of the Magic Hour sequence from the Standard test Evaluation Material (StEM) which is used for DCI compliance testing. The footage is very demanding on compression, with a lot of film grain, complex motion and significant detail within each frame. We played an uncompressed 16x9 version out to the Sony SRW1 deck in 440 and 880Mb/s modes, and compared those to a range of quality modes offered by the CineForm 444 codec. As the 880Mb/s mode is designed for two-camera shooting or operating at 60p, it is really overkill for a single stream of 24p content, so we added CineForm Filmscan2 to the testing mix (our overkill mode.)

The graph says a lot (click to see a larger image):

The large swing in the SR PSNR quality measurements is true to the constant bit-rate codec design; all tape-based or fixed-rate codecs have this nature, whereas the CineForm 444 variable bit-rate maintains a mostly constant quality. The 880Mb/s mode didn't do as well as I expected; doubling the SR bit-rate didn't buy very much. The graphs below compare the 440 and 880 modes for their color channel performance:

The odd element is the red channel's performance in 880Mb/s mode; it should not have been so much better than green or blue (first 200 frames.) It does in fact appear that the RGB channels are swapped, as if the dual link cables were interchanged for this test, yet they are fine for the 440Mb/s tests. I'm curious if anyone knows whether the SRW1 will even operate with the dual link cables reversed, or whether this is a byproduct of using the 880 (HQ) mode on a single camera stream. We did perform the 880Mb/s test after all other camera and deck testing at 440Mb/s, so an error may have been made when configuring for this test.

The graphs comparing Filmscan1 and Filmscan2 (in keying modes) show a predictable increase in PSNR quality over all channels equally.

At some point I would like to investigate the 880 mode again, mainly for curiosity, as it is not commonly used (only the SRW1 deck currently supports it.) The SRW1 is a mobile recorder and is not used for mastering; all the studio decks only support 440Mb/s. At the standard rate of HDCAM-SR, CineForm 444 maintained a higher quality throughout the entire sequence, no matter which CineForm quality setting was selected during the test.

The implications are simple: digital file-based delivery of content does not compromise quality versus tape-based mastering and delivery. An entire two-hour feature can be mastered to a single 350GB hard drive, for long-term archive (longer shelf life than tape), lossless duplication for storage redundancy and data migration, and simple delivery for film-out or mastering to other delivery formats (the drive will cost less than the tape(s).) Tape is still the dominant solution, but as the production world moves towards IT solutions, it is nice to know disk-based compression can deliver the goods. For those uncompressed fans, the same feature would consume 1.7TB, still do-able, just less convenient.
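As a rough sanity check on those storage figures, the average data rate implied by each can be worked out directly. This is a back-of-the-envelope sketch that assumes decimal units (1GB = 10^9 bytes) and a running time of exactly two hours; the `average_mbps` helper is purely illustrative.

```python
def average_mbps(total_bytes, duration_seconds):
    """Average data rate in megabits per second for a file of the given size."""
    return total_bytes * 8 / duration_seconds / 1e6


TWO_HOURS = 2 * 60 * 60  # seconds

# 350GB compressed master versus 1.7TB uncompressed, both over two hours.
compressed_mbps = average_mbps(350e9, TWO_HOURS)
uncompressed_mbps = average_mbps(1.7e12, TWO_HOURS)

print(f"compressed:   {compressed_mbps:.0f} Mb/s")
print(f"uncompressed: {uncompressed_mbps:.0f} Mb/s")
```

Under those assumptions the compressed master averages a little under 400Mb/s, while the uncompressed version needs close to 1.9Gb/s sustained.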

Tuesday, March 27, 2007

Codec Concatenation

This was a post I made on the cinematography mailing list; I thought it would be appropriate to reproduce it here.

They were discussing the use of compression upon ingest, prompted in part by our HDCAM SR vs CineForm vs uncompressed Viper tests. The discussion moved on from acquisition to post issues of compression concatenation. It seems that most now accept compression at the ingest stage as long as an uncompressed/lossless workflow follows. Certainly I would agree the acquisition compression is so light it is not going to impact the final output -- see the first posting on the results for the HDCAM SR vs CineForm tests.

Let's examine compression concatenation. We understand that concatenation between differing codecs is worse than re-encoding to the same codec. When compressing to the same codec as your source, a well-designed codec will settle: high-frequency quantization can't lose the same data twice. If the first generation is good, the subsequent generations will also be good.

Using a frame from the outdoor sequence

Standard quality first generation SR 49.48dB

Standard quality first generation CineForm 50.53dB (higher number is better)

PSNR from gen1 to gen2

Standard quality first generation CineForm 68.97dB (it settled)

The difference from source to second generation 50.48dB

So only a 0.05dB difference after an additional generation. It will take well over 10 generations for the PSNR of CineForm to fall to that of first generation HDCAM-SR. Yes, this is a good argument for sticking with one post-ready codec throughout your workflow. We experienced this three years ago when working on Dust to Glory with an early beta version of the CineForm codec; the post went through 6 or 7 generations before film-out, and it still looked beautiful. In this project, much of the source was HDCAM (as well as 16 and 35mm), so there was also a cross-codec concatenation that didn't impact the final results. The reason for this is headroom. Codecs designed for post are not trying to encode the image at the smallest data rate possible, where they fall apart on the second or third generation; instead there is quality to spare to handle concatenation issues.
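The "well over 10 generations" estimate can be checked with a little arithmetic on the figures above. The sketch below assumes the loss stays at a constant 0.05dB per generation, which is a simplification (a codec that settles may lose even less on later passes); the function name is just for illustration.

```python
def generations_to_reach(start_db, target_db, loss_per_generation_db):
    """Number of further generations for PSNR to fall from start to target,
    assuming a constant loss per generation (a simplifying assumption)."""
    return (start_db - target_db) / loss_per_generation_db


cineform_gen1_db = 50.53   # first generation CineForm, from the figures above
sr_gen1_db = 49.48         # first generation HDCAM-SR
loss_per_gen_db = 0.05     # measured gen1 to gen2 difference (50.53 - 50.48)

extra_generations = generations_to_reach(cineform_gen1_db, sr_gen1_db, loss_per_gen_db)
print(f"about {extra_generations:.0f} further generations")
```

With these numbers the gap of 1.05dB works out to roughly 21 further generations under the constant-loss assumption.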

Here is what happens with SR to CineForm codec concatenation.

Uncompressed to SR - 49.48dB

Uncompressed to CineForm FS1 - 50.53dB

Uncompressed to CineForm FS2 - 53.10dB

SR to CineForm FS1 - 50.54dB

SR to CineForm FS2 - 53.13dB

Uncompressed to SR to CineForm FS1 - 47.12dB

Uncompressed to SR to CineForm FS2 - 48.02dB

First note that SR to CineForm has the same distortion as Uncompressed to CineForm; this means that nothing nasty is happening with the source being SR compressed, and the same goes for output from a CineForm project to SR. Now the cross-codec conversion means there is no settling, resulting in a 1 to 2dB loss (depending on encoding mode) when going from uncompressed to SR to CineForm; further generations to the same codec will settle to an approximate 0.05dB loss per generation. Using the lowest number, 47dB PSNR, let's see if that is sufficient quality. When compared to the frame-to-frame PSNR of 40dB, that still means we have very approximately 7dB of headroom (yes, that logic will drive the image scientists nuts -- yet this is also from years of testing.) If you are going between wavelet and SR five or more times you might see issues, but otherwise you are safe. Anyone using an IT workflow will never have such cross-codec issues.

Again, lossless/uncompressed is the most robust workflow, but dismissing lossy compression without fully understanding how little loss there is could be throwing money away.

Monday, March 26, 2007

Quality results and the Green Screen Challenge

For those of you dying to know, here are the results for the Green Screen Challenge:

Viper 1 - CineForm in Filmscan1* mode with red and blue channels quantized more than green.

Viper 2 - HDCAM SR.

Viper 3 - Uncompressed.

Viper 4 - CineForm in Filmscan1* mode with all chroma channels quantized equally.

* Filmscan1 is the second highest quality; Filmscan2 may have led to data rates higher than HDCAM SR so it wasn't used in the test -- FS1 is an excellent acquisition quality.

Now before you say with hindsight -- "well that was obvious" -- it was not for those who entered the challenge.

Here are the stats from the entrants :

25% said the images all looked identical.

25% said the first CineForm clip was uncompressed.

37% said the HDCAM-SR clip was uncompressed.

25% found the uncompressed clip correctly (one user changed his mind after a week and got it correct the second time.)

As few selected Viper4 as uncompressed, I believe this is an indicator that people are likely looking for noise signatures, more being better. Viper4 has the least distortion of all three compression types, yet as it is wavelet based no mosquito noise is added, so it didn't stand out as the noisiest or the most filtered. HDCAM SR got some love, which is likely due to DCT tending to add some (in this case tiny) high frequency noise/distortion. So the slightly noisier clips got the most votes, followed equally by the most filtered or uncompressed (i.e. the two extremes.) Overall, the statistics can only conclude that it is very difficult to tell uncompressed from light lossy compression; at these high data-rates, the damaging compression artifacts characteristic of lower bit-rate distribution codecs are simply not visible.

Now for hard numbers on quality.

We ran multiple shoot tests with the Viper in Filmstream mode: charts, green-screens and one outdoor sequence. In all tests the CineForm modes outperformed the SR deck in its most common 440Mb/s mode (we also tested 880Mb/s -- this data is going in a follow-up post.) Let's look at the PSNR statistics for the outdoor sequence (a frame is pictured here.)

This sequence had the Viper stationary as our "actor" (David Taylor) walked towards the camera.

The first three charts show the changing PSNR numbers throughout the 10 second sequence -- the higher the number the better. Each chart has three graphs showing the PSNR numbers for the red, green and blue color channels.

The CineForm codec at 380Mb/s (keying mode) and HDCAM-SR both show color channels with similar levels of distortion, although each CineForm channel outperforms HDCAM-SR's by nearly 2dB. What may seem confusing are the results on the middle chart; the lower bit-rate CineForm clip has higher distortion on red and blue, yet green still outperforms HDCAM-SR. This is an experiment in exploiting the human visual model, which YUV does so well and RGB so poorly. Basically we (humans) have a much harder time seeing anything in the blue and red channels, so we reduced the data rate on these channels (note: they are still full resolution) to see if anyone would notice (no one reported this in the green screen tests.) To see why this works, look at the luminance charts; luminance was calculated using ITU-R BT.709 coefficients, and the PSNR distortion measurement gave these results:

So even the 320Mb/s CineForm has a "perceptively" higher quality than HDCAM-SR. Note: this is a poor man's human visual model simulation; for anyone wanting to run further picture quality analysis, we can make the sequences available. I'm sure the lossless/uncompressed die-hards will be even more maddened by this analysis (I've got more coming in a follow-up post), but the human eye is not a scientifically calibrated imaging instrument, and its deficiencies can be exploited with little consequence. We will likely offer both compression modes so you can choose how to allocate your bit-rate as your project needs, even if you will never notice the difference.
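For anyone wanting to repeat the luminance measurement, a minimal sketch follows. It uses the Rec. 709 luma weights and the usual PSNR definition, 10 * log10(peak^2 / MSE). The 8-bit peak of 255, the flat pixel lists and the sample values are assumptions for illustration only; the actual test pipeline and bit depth are not described here.

```python
import math

# Rec. 709 luma weights for red, green and blue.
KR, KG, KB = 0.2126, 0.7152, 0.0722


def luma(pixel):
    """Weighted sum of an (r, g, b) pixel using Rec. 709 coefficients."""
    r, g, b = pixel
    return KR * r + KG * g + KB * b


def psnr(reference, test, peak=255.0):
    """PSNR in dB between two equal-length sequences of sample values."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(peak ** 2 / mse)


def luma_psnr(reference_pixels, test_pixels, peak=255.0):
    """PSNR of the luma signal derived from two lists of (r, g, b) pixels."""
    return psnr([luma(p) for p in reference_pixels],
                [luma(p) for p in test_pixels], peak)


# Hypothetical data: the same size of error placed on red/blue versus green.
source = [(120, 130, 140)] * 4
red_blue_error = [(124, 130, 144)] * 4
green_error = [(120, 134, 140)] * 4

rb_db = luma_psnr(source, red_blue_error)
g_db = luma_psnr(source, green_error)
```

Because green carries about 72% of the luma weight, an error of a given size on red and blue costs less luminance PSNR than the same error on green, which is the effect the lower bit-rate mode is exploiting.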